A fan of hexagonal architecture and its promises for a very long time, I have often spent time understanding it, explaining it, and demystifying its implementation. On the other hand, I've often limited my explanations to the simple cases: what we call the “happy path”, where everything goes well. In this first article of an upcoming miniseries, I want to talk about some implementation details as well as traps that are very easy to fall into. Finally, I will take some time to talk about how to test your hexagon, suggesting a non-orthodox strategy. The idea is to save you a little time when you implement this incredibly useful architectural pattern.

The "non-cohesive right-side adapter" anti-pattern

We will start today with a design smell: the non-cohesive right-side adapter. If you do not know hexagonal architecture yet (a.k.a. ports and adapters), I suggest you start by reading my previous post on the subject, which summarizes all this. To explain the non-cohesive right-side adapter anti-pattern, let's first recall a few fundamentals of Alistair COCKBURN’s hexagonal architecture:

- We split our software into 2 distinct regions:

  - The inside (for the Domain / business code that we call "the Hexagon")

  - The outside (for the Infrastructure / tech code)

- To enter the Hexagon and interact with the domain, we talk to a port that we call "driver port" or "left-side port"

- The domain code will then use one or more third-party systems (data stores, web APIs, etc.) to gather information or trigger side-effects. To do so, the business code must stay at its business level of semantics, which it does by going through one or more "driven ports" or "right-side ports".

- A port is therefore an interface belonging to the Domain, using business semantics to express either the requests that we address to our system (left-side port) or the external interactions that it performs along the way to achieve its goal (right-side port). I like to see ports as drawbridges that we use to come and go between the infrastructure side and the Domain side (an analogy that Cyrille MARTRAIRE whispered to me a very long time ago).

- In terms of dependency, the infrastructure code knows and references the Domain one. But let's be more precise here (BTW, thank you for your feedback Alistair ;-): only the left-side infrastructure code knows the Domain actually (to interact with it through a left-side port).

- On the other hand, the Domain (business) code must never reference any technical/infrastructure-level code. It works at runtime thanks to the Dependency Inversion principle, which Gerard MESZAROS once called "configurable dependencies" (and which lends itself very well to Alistair's pattern).

- An adapter is a piece of code which makes it possible to pass from one world to the other (infra => domain or domain => infra) and which takes care, in particular, of converting the data structures of one world (e.g. JSON or DTO) into those of the other (e.g. POJO or POCO of the domain).

- There are 2 kinds of adapters:

  - "Left-side adapters", which use a left-side port to interact with the Domain (thus having an aggregation-type relationship with it)

  - "Right-side adapters", which implement a right-side port
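To make these fundamentals concrete, here is a minimal Python sketch of the moving parts. It is only an illustration under assumptions: every name in it (`Page`, `ForGeneratingSitemaps`, `ForListingPages`, `SitemapGenerator`, `CmsPagesAdapter`, `SitemapController`, the `url_path` field) is hypothetical and does not come from a real code base.

```python
from dataclasses import dataclass
from typing import Callable, Protocol


@dataclass(frozen=True)
class Page:
    """Domain object (hypothetical): a page to list in a sitemap."""
    path: str


class ForGeneratingSitemaps(Protocol):
    """Left-side (driver) port: how the outside asks the Domain to act."""
    def generate(self) -> list[str]: ...


class ForListingPages(Protocol):
    """Right-side (driven) port: owned by the Domain, in business terms."""
    def pages(self) -> list[Page]: ...


class SitemapGenerator:
    """The Hexagon: business code that only knows ports, never infrastructure."""

    def __init__(self, pages: ForListingPages, base_url: str) -> None:
        self._pages = pages
        self._base_url = base_url

    def generate(self) -> list[str]:
        return [self._base_url + page.path for page in self._pages.pages()]


class CmsPagesAdapter:
    """Right-side adapter: implements one driven port and converts
    infrastructure data (dict rows) into Domain objects."""

    def __init__(self, fetch_rows: Callable[[], list[dict]]) -> None:
        self._fetch_rows = fetch_rows  # the actual I/O, injected

    def pages(self) -> list[Page]:
        return [Page(row["url_path"]) for row in self._fetch_rows()]


class SitemapController:
    """Left-side adapter: holds a driver port and translates an incoming
    request into a call on it."""

    def __init__(self, hexagon: ForGeneratingSitemaps) -> None:
        self._hexagon = hexagon

    def post(self) -> dict:
        return {"status": 200, "urls": self._hexagon.generate()}


# Wiring: the right-side adapter is injected into the Domain, which is then
# handed to the left-side adapter.
rows = lambda: [{"url_path": "/home"}, {"url_path": "/offers"}]
controller = SitemapController(
    SitemapGenerator(CmsPagesAdapter(rows), "https://example.org")
)
print(controller.post())
```

Note that `SitemapGenerator` never imports anything from the infrastructure side: it only sees the `ForListingPages` port, and the concrete adapter is handed to it at wiring time.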

The problem with the right wing...

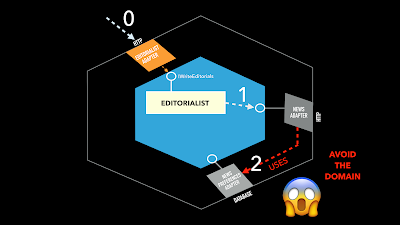

You may have noticed that I did not say that a right-side adapter implements multiple right-side ports. That was intentional. If it does, there is a good chance that you will land on the anti-pattern I want to discuss here: the non-cohesive right-side adapter.

This is what happens when you give too much responsibility to a right-side adapter: you risk moving part of the Domain logic of your hexagon into one of its peripheral elements on the infrastructure side (i.e. the adapter). It happens especially when an adapter references and uses another adapter directly.

Of course, this is something I strongly advise against. You need to keep all the business orchestration logic at the Domain level and not scatter it here and there in the infrastructure code. But since I've seen that everyone falls into this trap at least once, it's worth mentioning. Avoid this situation if you don't want to end up with an anemic Domain.
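A small Python sketch may help to picture the smell and its remedy. It is a made-up example: the catalog/pricing domain, the class names, and the business rule ("a product without a price is not sellable") are all assumptions chosen for illustration.

```python
from typing import Protocol


class PricingAdapter:
    """Right-side adapter for a (hypothetical) pricing service."""

    def price_of(self, sku: str) -> float:
        return {"A1": 10.0, "B2": 0.0}.get(sku, 0.0)  # stands in for a remote call


# --- Anti-pattern: an adapter uses another adapter and hides a business rule ---
class NonCohesiveCatalogAdapter:
    def __init__(self, pricing: PricingAdapter) -> None:
        self._pricing = pricing  # adapter-to-adapter dependency

    def sellable_skus(self) -> list[str]:
        skus = ["A1", "B2"]  # stands in for a database read
        # Business rule buried in infrastructure code:
        return [sku for sku in skus if self._pricing.price_of(sku) > 0]


# --- Alternative: two dumb adapters behind two ports, orchestrated by the Domain ---
class ForListingSkus(Protocol):
    def all_skus(self) -> list[str]: ...


class ForPricing(Protocol):
    def price_of(self, sku: str) -> float: ...


class CatalogAdapter:
    """Dumb right-side adapter: fetches and converts, decides nothing."""

    def all_skus(self) -> list[str]:
        return ["A1", "B2"]  # stands in for a database read


class Catalog:
    """Domain service: the rule and the orchestration are visible here."""

    def __init__(self, skus: ForListingSkus, pricing: ForPricing) -> None:
        self._skus = skus
        self._pricing = pricing

    def sellable_skus(self) -> list[str]:
        return [
            sku for sku in self._skus.all_skus()
            if self._pricing.price_of(sku) > 0
        ]


print(NonCohesiveCatalogAdapter(PricingAdapter()).sellable_skus())
print(Catalog(CatalogAdapter(), PricingAdapter()).sellable_skus())
```

Both variants return the same result, but only the second one keeps the rule in the Domain, where a test of the hexagon will exercise it.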

Worse: in that case, those who test only the center of the hexagon (i.e. the domain code) by stubbing the adapters all around would miss lots of code and potential bugs, tucked away in untested adapters. It is extremely easy to deceive yourself by baking implicit assumptions into the behaviour of your adapter stubs. You will very often put in those stubs what you think the adapters will do or should do. The risk afterwards is to fail to implement the concrete adapter properly (once you have forgotten the implicit assumptions you put in your stubs in the first place). This gives you false confidence: a fully green test harness, but potentially buggy behaviour in production (when using the real concrete right-side adapters).

Let's talk a little bit about test strategy

I have already written and spoken about this, but since I have not yet translated my most specific article on the subject into English, I will just clarify here some essential terms needed to understand the rest of the discussion.

After more than 15 years of practicing TDD at work, I have arrived over the years at a thrifty form of outside-in TDD which suits 90% of my projects and contexts very well. Outside-in means that I consider my system (often a web API) as a whole and from the outside. A bit like a big black box that I’m going to grow and improve gradually by writing acceptance tests that will drive its behaviours and shape (see the fantastic GOOS book from Nat Pryce and Steve Freeman if you want to know more). Those acceptance tests aren’t interested in implementation details (i.e. how the inside of the black box is coded) but only in how to interact with the box in order to get the expected reactions and behaviours. This lets me easily change the internal implementation of my black box whenever I want without slowing me down or breaking fragile tests.

My acceptance tests are therefore coarse-grained tests covering the full box (here, the entire hexagon) but using neither real technical protocols nor real data stores, in order to be as fast as possible (I'm addicted to short feedback loops and thus use a tool to run my tests every time I change a line of code).

Besides these acceptance tests, which constitute 80% of my strategy, I generally write some fine-grained unit tests (the small tests of the "double loop", when I need them), some integration tests, and some contract tests for my stubs to check that they behave like the real external dependencies they substitute for (and also to detect when something changes externally). The rest of this article will mainly talk about my coarse-grained acceptance tests.

The best possible trade-off

With this parenthesis about testing terminology and my default test strategy closed, let's return to our initial problem: how to avoid writing incomplete tests that let bugs slip through at runtime once we deploy our system on our server.

My recommendation: write acceptance tests that cover your entire hexagon, except for the I/O at the border. Yes, you heard me right: I advise you to write acceptance tests that use all your concrete adapters and not stubs for them. The only things you will have to stub are the network calls and the disk or database accesses made by your adapters. By doing so, you test all your code in full assembly mode (and therefore without illusions or unpleasant surprises in production), while staying blazing fast ;-)

Can you give me an example?

Sure. Let's take an example to make the implicit explicit.

Imagine that you have to code a sitemap.xml file generator for the SEO of a web platform whose content is completely dynamic and controlled by several business lines (e-biz, marketing). This hexagonal micro-service could be a web API called daily in order to regenerate all the sitemaps of the related website. You send an HTTP POST request, and all the sitemap files are then updated and published (as a side note: please, don't tell my mum that I mentioned microservices in one of my articles ;-)

Okay. Well, instead of writing acceptance tests like this, where every adapter is stubbed:
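As a rough illustration of that fully stubbed style (a Python sketch with hypothetical names, not the original code), the test below replaces the whole right-side adapter with a stub. Notice the implicit assumption that sneaks into the stub.

```python
import unittest
from dataclasses import dataclass


@dataclass(frozen=True)
class Page:
    path: str


class SitemapGenerator:
    """Domain service (hypothetical) relying on a right-side port."""

    def __init__(self, pages_port, base_url: str) -> None:
        self._pages = pages_port
        self._base_url = base_url

    def generate(self) -> list[str]:
        return [self._base_url + page.path for page in self._pages.pages()]


class StubbedPagesPort:
    """Stub standing in for the entire right-side adapter.

    Implicit assumption baked in: every path starts with '/'.
    Nothing in this test checks that the real adapter honours it.
    """

    def pages(self) -> list[Page]:
        return [Page("/home"), Page("/offers")]


class SitemapAcceptanceTest(unittest.TestCase):
    def test_generates_one_url_per_page(self) -> None:
        hexagon = SitemapGenerator(StubbedPagesPort(), "https://example.org")

        self.assertEqual(
            hexagon.generate(),
            ["https://example.org/home", "https://example.org/offers"],
        )
```

The test is green, yet none of the concrete adapter code (parsing, conversion, error handling) has been executed.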

I would rather advise you to write acceptance tests in which you include your concrete adapters and only stub their last-mile I/O. It is worth mentioning here that I’m using my ASP.NET web controller as my left-side adapter. Anyway, it looks like this:
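The sketch below is not the original ASP.NET code. It is a minimal Python illustration of the same idea, in which all names (`CmsPagesAdapter`, `FileSitemapPublisher`, the `/api/pages` endpoint, the `slug` field) are assumptions and the XML is deliberately simplified. The concrete adapters take part in the test; only the HTTP call and the disk write are replaced.

```python
import unittest
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Page:
    path: str


class SitemapGenerator:
    """Domain (hypothetical): builds URLs and asks a port to publish them."""

    def __init__(self, pages_port, publisher_port, base_url: str) -> None:
        self._pages = pages_port
        self._publisher = publisher_port
        self._base_url = base_url

    def regenerate(self) -> int:
        urls = [self._base_url + page.path for page in self._pages.pages()]
        self._publisher.publish(urls)
        return len(urls)


class CmsPagesAdapter:
    """Concrete right-side adapter; only its HTTP call is injected."""

    def __init__(self, http_get: Callable[[str], list[dict]]) -> None:
        self._http_get = http_get

    def pages(self) -> list[Page]:
        rows = self._http_get("/api/pages")  # hypothetical endpoint
        return [Page("/" + row["slug"].lstrip("/")) for row in rows]


class FileSitemapPublisher:
    """Concrete right-side adapter; only its disk write is injected."""

    def __init__(self, write_file: Callable[[str, str], None]) -> None:
        self._write_file = write_file

    def publish(self, urls: list[str]) -> None:
        entries = "".join(f"<url><loc>{url}</loc></url>" for url in urls)
        self._write_file("sitemap.xml", f"<urlset>{entries}</urlset>")


class SitemapController:
    """Left-side adapter (playing the role of the web controller)."""

    def __init__(self, hexagon: SitemapGenerator) -> None:
        self._hexagon = hexagon

    def post(self) -> dict:
        return {"status": 200, "generated": self._hexagon.regenerate()}


class SitemapAcceptanceTest(unittest.TestCase):
    def test_post_publishes_one_entry_per_cms_page(self) -> None:
        written: dict[str, str] = {}
        # Only the last-mile I/O is stubbed: the HTTP call and the disk write.
        fake_http_get = lambda url: [{"slug": "home"}, {"slug": "/offers"}]
        hexagon = SitemapGenerator(
            CmsPagesAdapter(fake_http_get),
            FileSitemapPublisher(written.__setitem__),
            "https://example.org",
        )

        response = SitemapController(hexagon).post()

        self.assertEqual(response, {"status": 200, "generated": 2})
        self.assertEqual(
            written["sitemap.xml"],
            "<urlset><url><loc>https://example.org/home</loc></url>"
            "<url><loc>https://example.org/offers</loc></url></urlset>",
        )
```

Here the slug normalisation and the XML formatting, which live in the adapters, are exercised by the acceptance test, while the test still never touches the network or the disk.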

There is a better way

Of course, in order to have readable, concise (no more than 10 to 15 lines long) and expressive acceptance tests (i.e. tests in which you clearly see the values passed to your stubs), you will generally go through builders for your stubs and mocks. You can also use them to build your hexagon (which is a three-step initialization process). I didn't show it earlier because it would have made my example less clear, but a target version might look like this:
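Again, what follows is not the original code but a hedged Python sketch with invented names (`HexagonBuilder`, `with_cms_pages`, `with_base_url`). It assumes that the three initialization steps are: instantiate the right-side adapters, instantiate the hexagon with them, then instantiate the left-side adapter with the hexagon.

```python
from typing import Callable


class SitemapGenerator:
    """Domain (hypothetical)."""

    def __init__(self, pages_port, base_url: str) -> None:
        self._pages = pages_port
        self._base_url = base_url

    def generate(self) -> list[str]:
        return [self._base_url + path for path in self._pages.paths()]


class CmsPagesAdapter:
    """Concrete right-side adapter; only its HTTP call is injected."""

    def __init__(self, http_get: Callable[[str], list[dict]]) -> None:
        self._http_get = http_get

    def paths(self) -> list[str]:
        rows = self._http_get("/api/pages")  # hypothetical endpoint
        return ["/" + row["slug"].lstrip("/") for row in rows]


class SitemapController:
    """Left-side adapter."""

    def __init__(self, hexagon: SitemapGenerator) -> None:
        self._hexagon = hexagon

    def post(self) -> dict:
        return {"status": 200, "urls": self._hexagon.generate()}


class HexagonBuilder:
    """Test builder hiding the wiring so that tests stay short."""

    def __init__(self) -> None:
        self._rows: list[dict] = []
        self._base_url = "https://example.org"

    def with_cms_pages(self, *slugs: str) -> "HexagonBuilder":
        self._rows = [{"slug": slug} for slug in slugs]
        return self

    def with_base_url(self, base_url: str) -> "HexagonBuilder":
        self._base_url = base_url
        return self

    def build(self) -> SitemapController:
        # Step 1: right-side adapters, with only their last-mile I/O stubbed.
        pages_adapter = CmsPagesAdapter(lambda url: self._rows)
        # Step 2: the hexagon, wired to the right-side adapters.
        hexagon = SitemapGenerator(pages_adapter, self._base_url)
        # Step 3: the left-side adapter, wired to the hexagon.
        return SitemapController(hexagon)


def test_lists_every_cms_page() -> None:
    controller = HexagonBuilder().with_cms_pages("home", "offers").build()

    assert controller.post() == {
        "status": 200,
        "urls": ["https://example.org/home", "https://example.org/offers"],
    }
```

The values that matter for the scenario stay visible in the test itself (`"home"`, `"offers"`), while the plumbing is pushed into the builder.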

A good adapter is a pretty dumb adapter

Expected objections

People generally agree on the need to keep the domain in the center of the Hexagon. On the other hand, I've got some objections related to my testing strategy. Let's review some of them.

First, it could be objected that my acceptance tests are a bit hybrid, in that they cover both Domain considerations and technical ones. Not very orthodox...

Well... actually I don't really care about orthodoxy or by-the-book-ism ;-) As long as you can explain your choices and the trade-offs you make, I'm ok with that.

Moreover, it can be completely transparent if you make sure that each of your acceptance tests covers business behaviours of your system (service / API / microservice...). It's up to you to work on the expressiveness of your tests: their names, their simplicity, their ubiquitous language, but also the conciseness of the "arrange" steps (using DSL-like expressivity, for example).

I landed on this new strategy this year after tons of trial and error. Before that, I was implementing the test strategy often put forward by the London friends from Cucumber (Seb Rose, Steve Tooke and Aslak Hellesøy). That one aims to combine:

- A lot of acceptance tests but for which we stub all the adapters (thus blazing fast tests)

- And some additional integration/contract tests on the side, in another project. These contract tests verify that the adapter stubs used in our acceptance tests have exactly the same behaviour as their real concrete adapters (the ones that we package and deliver with our hexagon in production). These integration tests are therefore much slower and run less often (mainly on the dev factory, not within my local NCrunch automatic runner). But no surprise here, because it's an expected trade-off.
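One common way to write such contract tests is to run the very same expectations against both the stub and the real adapter. The Python sketch below illustrates that technique under assumptions: all names are hypothetical, and the "real" adapter here simply reads a JSON file from disk so that the example stays runnable.

```python
import json
import tempfile
import unittest
from pathlib import Path


class JsonFilePagesAdapter:
    """'Real' adapter of this sketch: reads page slugs from a JSON file."""

    def __init__(self, file_path: Path) -> None:
        self._file_path = file_path

    def paths(self) -> list[str]:
        slugs = json.loads(self._file_path.read_text(encoding="utf-8"))
        return ["/" + slug.lstrip("/") for slug in slugs]


class InMemoryPagesStub:
    """Stub used by the fast acceptance tests."""

    def __init__(self, slugs: list[str]) -> None:
        self._slugs = slugs

    def paths(self) -> list[str]:
        return ["/" + slug.lstrip("/") for slug in self._slugs]


class PagesPortContract:
    """Shared expectations; subclasses say how to build the implementation."""

    def make(self, slugs: list[str]):
        raise NotImplementedError

    def test_paths_are_rooted(self) -> None:
        adapter = self.make(["home", "/offers"])
        self.assertEqual(adapter.paths(), ["/home", "/offers"])

    def test_empty_source_gives_empty_list(self) -> None:
        self.assertEqual(self.make([]).paths(), [])


class StubHonoursContract(PagesPortContract, unittest.TestCase):
    def make(self, slugs: list[str]):
        return InMemoryPagesStub(slugs)


class RealAdapterHonoursContract(PagesPortContract, unittest.TestCase):
    def make(self, slugs: list[str]):
        # Real disk I/O: slower, hence typically run less often.
        folder = tempfile.TemporaryDirectory()
        self.addCleanup(folder.cleanup)
        file_path = Path(folder.name) / "pages.json"
        file_path.write_text(json.dumps(slugs), encoding="utf-8")
        return JsonFilePagesAdapter(file_path)
```

Every scenario added to `PagesPortContract` is checked against both implementations, which also makes it obvious when the contract only covers happy paths.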

So we had a really interesting situation here, which allowed us to have tons of super-fast acceptance tests AND to be confident enough that our right-side adapter stubs were true to reality (for some reference scenarios).

I talk about it in the past tense because I never really managed to be confident enough with this setup during my various experiences with different teams.

Blind spot

Indeed, I regularly had problems with this strategy because we didn't cover enough cases, errors or exceptions within those contract tests of our right-side adapters. Too many happy paths (basic scenarios where everything goes well), not enough corner cases and exceptions. To put it another way, our acceptance tests were exercising our stubs in many more cases than their contract tests covered.

We developers are attracted to the happy path as much as moths are to a lit bulb. It seems to be in our nature (quite the opposite of QA people ;-) I knew that already. But I observed these situations carefully to try to understand what had made us fail here (in my various teams and contexts). I came to the conclusion that it was because these integration tests, in addition to being very "plumbing oriented" (more legacy-oriented than domain-oriented), were much slower to run than our unit or acceptance ones. Every time you add a new parameter combination, it increases the overall execution time of the test harness. That’s why my teammates paid less attention to them. The attitude was a bit: "anyway, it's an integration test for a stub... it should do the job but we're not going to spend too much time and effort on it either".

As a consequence, we tested far fewer case combinations within these contract tests for our stubs than we did in our other tests (i.e. coarse-grained acceptance tests or fine-grained unit tests).

Stubbing less

The side-effect of this was that the combination of contract tests and acceptance tests was not sufficient to catch all the bugs or plumbing problems in our final assembly. This is why I finally arrived at the test strategy presented in this article, which includes the concrete adapters in our acceptance tests. On the other hand, I continue to use contract testing for external components or third-party APIs. But I now stub fewer things: my stubs only cover a very thin part of my system.

One can pick hexagonal architecture for different reasons

This one is important. As a final warning, I should also point out that my testing strategy was designed for my contexts and has worked well in them so far. Of course, I’m not saying that this is a one-size-fits-all solution (I don’t even think that such a thing exists).

In particular, my contexts aren’t the ones Alistair faced when he created the pattern. Back in the day, Alistair had to find a way to cope with a huge number of connections and technologies, and to keep his Domain logic from suffering a combinatorial explosion (for a weather forecast system).

At work, I mainly use the hexagonal architecture pattern because it allows me to split my business code from my technical code, not to combine or easily switch my left-side technologies and adapters. This is why I usually have a single exposure for my business services, one that fits my purpose and context exactly: REST-like HTTP for a web API, GraphQL sometimes for website backends, Aeron middleware for a low latency service, AMQP-based middleware for some HA, resilient and scalable services, RPC for... (no, I'm kidding, I hate the RPC paradigm so much ;-)

More than that, it is very likely that the communication middleware I pick impacts my business code interactions (left-side port) far more than a simple switch of adapters would. In some cases, the middleware technology I use may even impact my programming paradigm (event-driven reactive programming/classical OOP/FP/lame OOP/transaction script). This is why my left-side port may often become a leaky abstraction.

As a consequence, I only need one adapter on the left side. This saves me from having to run all my acceptance tests as many times as I would have left-side exposure technologies. That is worth mentioning (at least for people who have an objective similar to Alistair's).

And when my goal is to build a REST-like web API, I even use my web controllers as left-side adapters instead of creating another ad-hoc adapter type (many of us do this, judging by everyone who has chatted with me during conferences or my DDD training sessions).

An interesting side-effect

The technique I have been promoting here (testing everything but the I/O) will put you in a very comfortable situation at the end of the day. Indeed:

- your acceptance tests will cover a code base very faithful to what runs in production (my main driver here)

- your acceptance test harness will allow you to calmly refactor your code base if you have made a mistake and put some business behaviour into your adapters. Thanks to this test harness covering everything, wrongly located business code can be moved safely from the right-side adapter to the Domain.

Perhaps we could find an easy way (other than pairing or code review) to prevent less experienced people from falling into this trap. For the moment, this ability to easily refactor a posteriori, when we screwed this up (or when we took on a little short-term technical debt), has been more than enough for my projects.

Conclusion

Since this post is long enough, I’ll be brief:

- Always go through the center of the hexagon; do not connect your right-side adapters to each other

- Do not code YOUR domain logic or YOUR domain orchestration logic in your adapters

- Test your entire hexagon (including adapters). To do so, only stub the last-mile I/O of your right-side adapters.

- On the side, keep testing your test doubles or stubs of external dependencies against real implementations via contract-based integration tests.

The next 2 articles in this series dedicated to hexagonal architecture

will talk about the subject of health checks (how to know if our hexagon is in

shape or not) but also about the comparison with an alternative to the pattern:

Functional Core (with imperative shell).

Happy Coding! See you soon.

Thomas

Hi!

First of all, thanks for this interesting read. It's always nice to read about other people's experience when it comes to such topics.

I had a few questions though :

Do we agree that with your newest testing strategy one downside is that it makes you write stub data that depend on the actual driven infrastructure (on the right-side of the hexagon) ? In practice I guess it's not such a pain as switching from an infra to another isn't so frequent.

Do you have an example of implementation of your new strategy (given https://github.com/42skillz/kata-TrainReservation was about your former strategy) ?

Also I would be interested in reading your follow-up article about "Functional Core (with imperative shell)." : when you say it's an "alternative to the pattern", you mean an alternative to health checks or the hexa architecture ? For me it goes pretty well hand-in-hand with hexa pattern so I'm not sure I understand.

Thanks!